How to Survive an AI Apocalypse – Part 9: The Stabilization Effect

February 10, 2024

PREVIOUS: How to Survive an AI Apocalypse – Part 8: Fighting Back

Here’s where it gets fun.

Or goes off the rails, depending on your point of view.

AI meets Digital Philosophy meets Quantum Mechanics meets UFOs.

This entire blog series has been about surviving an AI-based Apocalypse, a very doomsday kind of event. For some experts, this is all but inevitable. You readers may be coming to a similar conclusion.

But haven’t we heard this before? Doomsday prophecies have been around as long as… Keith Richards. The Norse Ragnarök, the Hindu prophecy of the end of times during the current age of Kaliyuga, the Zoroastrian Renovation, and of course, the Christian Armageddon. An ancient Assyrian tablet dated 2800-2500 BCE tells of corruption and unruly teenagers and prophesies that “earth is in its final days; the world is slowly deteriorating into a corrupt society that will only end with its destruction.” Fast forward to the modern era, where the Industrial Revolution was going to lead to the world’s destruction. We have since had the energy crisis, the population crisis, and the Doomsday Clock ticking down to nuclear armageddon. None of it ever comes to pass.

Is the AI apocalypse more of the same, or is it frighteningly different in some way? This Part 9 of the series will examine such questions and present a startling conclusion that all may be well.

THE NUCLEAR APOCALYPSE

To get a handle on the likelihood of catastrophic end times, let’s take a deep dive into the specter of a nuclear holocaust.

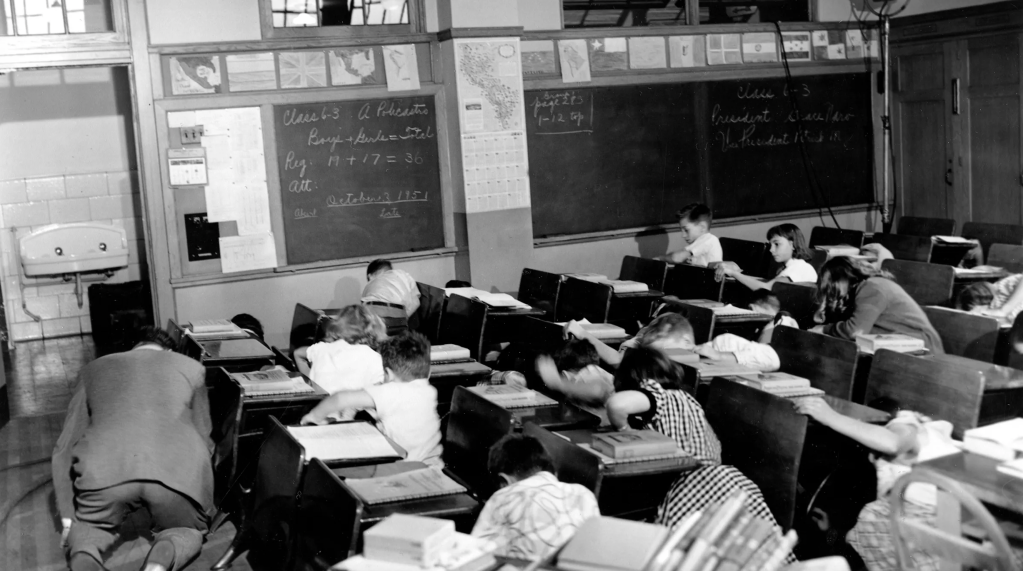

It’s hard for many of us to appreciate what a frightening time it was in the 1950s, as people built fallout shelters and children regularly executed duck and cover drills in the classrooms.

Often considered to be the most dangerous point of the Cold War, the 1962 Cuban Missile Crisis was a standoff between the Soviet Union and the United States involving the deployment of Soviet missiles in Cuba. At one point the US Navy began dropping depth charges to force a nuclear-armed Soviet submarine to surface. The crew on the sub, having had no radio communication with the outside world, didn’t know if war was breaking out or not. The captain, Valentin Savitsky, wanted to launch a nuclear weapon, but a unanimous decision among the three top officers was required for launch. Vasily Arkhipov, the second in command, cast the sole dissenting vote and even got into an argument with the other two officers. His courage effectively prevented the nuclear war that was likely to result. Thomas S. Blanton, later the director of the US National Security Archive, called Arkhipov “the man who saved the world.”

But that wasn’t the only time we were a hair’s breadth away from the nuclear apocalypse.

On May 23, 1967, US military commanders issued a high alert due to what appeared to be jammed missile detection radars in Alaska, Greenland, and the UK. Considering the jamming an act of war, they authorized preparations for war, including the deployment of aircraft armed with nuclear weapons. Fortunately, a NORAD solar forecaster identified the reason for the jammed radar – a massive solar storm.

Then, on the other side of the red curtain, on 26 September 1983, with international tensions still high after the recent Soviet military shootdown of Korean Air Lines Flight 007, a nuclear early-warning system in Moscow reported that 5 ICBMs (intercontinental ballistic missiles) had been launched from the US. Lieutenant Colonel Stanislav Petrov was the duty officer at the command center and suspected a false alarm, so he awaited confirmation before reporting, thereby disobeying Soviet protocol. He later said that had he not been on shift at that time, his colleagues would have reported the missile launch, likely triggering a nuclear war.

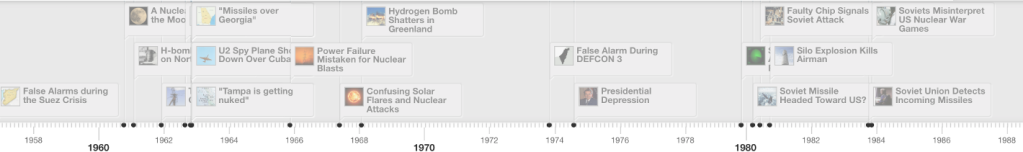

In fact, over the years there have been at least 21 nuclear war close calls, any of which could easily have led to a nuclear conflagration and the destruction of humanity. The following timeline, courtesy of the Future of Life Institute, shows how many occurred in just the 30-year period from 1958 to 1988.

It kind of makes you wonder what else could go wrong…

END OF SOCIETY PREDICTED

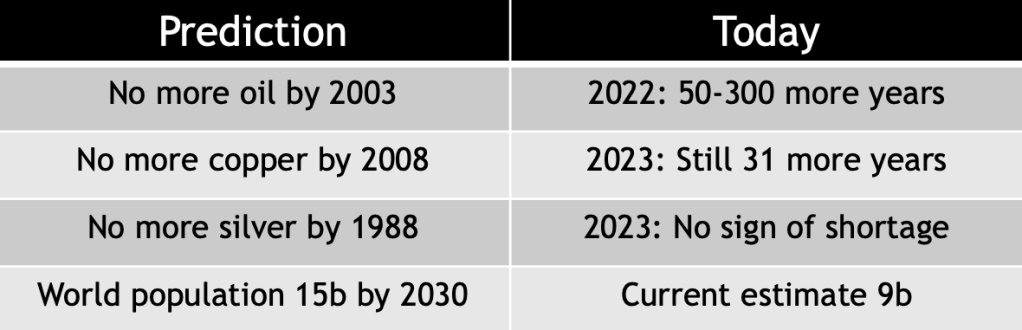

Another modern age apocalyptic fear was driven by the recognition that exponential growth and limited resources are ultimately incompatible. At the time, the world population was growing exponentially and important resources like oil and arable land were being depleted. The Rockefeller Foundation partnered with the OECD (Organization for Economic Cooperation and Development) to form The Club of Rome, a group of current and former heads of state, scientists, economists, and business leaders to discuss the problem and potential solutions. In 1972, with the support of computational modeling from MIT, they issued their first report, The Limits to Growth, which painted a bleak picture of the world’s future. Some of the predictions (and their ultimate outcomes) follow:

Another source for this scare was the book The Population Bomb by Stanford biologist Paul Ehrlich. He and people like Harvard biologist George Wald also made some dire predictions…

There is actually no end to failed environmental apocalyptic predictions – too many to list. But a brief smattering includes:

- “Unless we are extremely lucky, everyone will disappear in a cloud of blue steam in 20 years.” (New York Times, 1969)

- “UN official says rising seas to ‘obliterate nations’ by 2000.” (Associated Press, 1989)

- “Britain Will Be Siberian in Less Than 20 Years” (The Guardian, 2004)

- “Scientist Predicts a New Ice Age by 21st Century” (Boston Globe, 1970)

- “NASA scientist says we’re toast. In 5-10 years, the arctic will be ice free.” (Associated Press, 2008)

Y2K

And who could forget this apocalyptic gem…

My intent is not to cherry pick the poor predictions and make fun of them. It is simply that when we are swimming in the sea of impending doom, it is really hard to see the way out. And yet, there does always seem to be a way out.

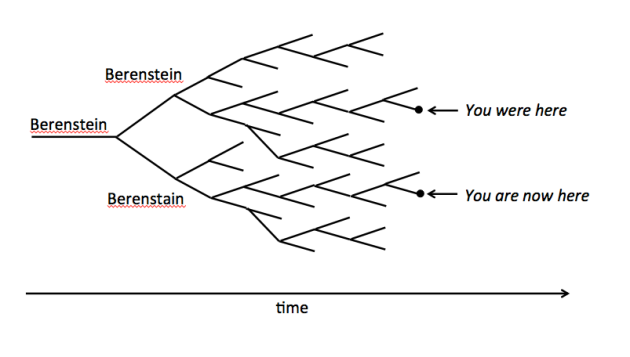

Sometimes it is mathematical. For example, there was a mathematical determination of when we would run out of oil based on known supply and rate of usage, perhaps factoring in the trend of increase in rate of usage. But what was not factored into the equation were the counter effects of new reserves being discovered and improvements in engine efficiency. One could argue that in the latter case, the scare achieved its purpose, just as the fear of global warming has resulted in a number of new environmental policies and laws, such as California’s upcoming ban on sales of new gasoline-powered vehicles in 2035. However, that isn’t always the case. Many natural resources, for instance, seem to actually be increasing in supply. I am not necessarily arguing for something like the abiotic oil theory.

However, at the macro level, doesn’t it sometimes feel like a game of civilization, where we are given a set of resources, cause and effect interrelationships, and the ability to acquire certain skills? In the video game, when we fail on an apocalyptic level, we simply hit the reset button and start over. But in real life we can’t do that. Yet, doesn’t it seem like the “game makers” always hand us a way out, such as unheard of new technologies that are seemingly suddenly enabled? And it isn’t always human ingenuity that saves us. Sometimes, the right person is on duty at the perfect time against all odds. Sometimes, oil fields magically replenish on their own. Sometimes asteroids strike the most remote place on the planet.

THE STABILIZATION EFFECT

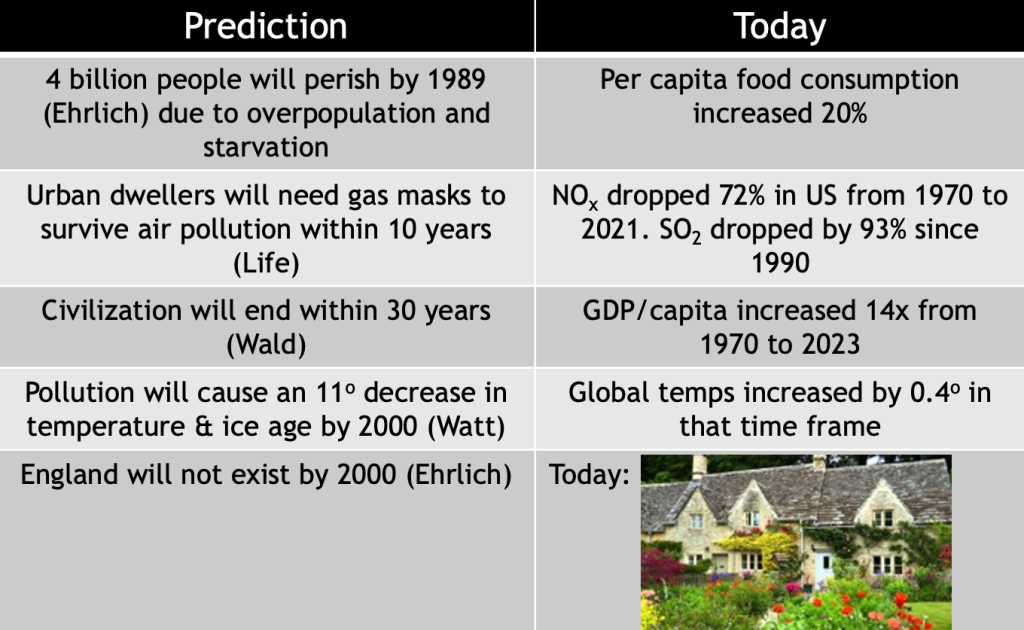

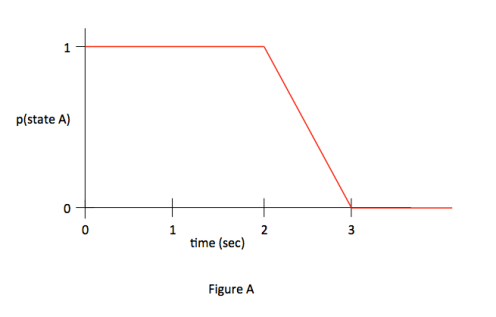

In fact, it seems statistically significant that apocalypses, while seemingly imminent, NEVER really occur. So much so that I decided to model it with a spreadsheet using random number generation (also demonstrating how weak my programming skills have gotten). The intent of the model is to encapsulate the state of humanity on a simple timeline using a parameter called “Mood” for lack of a better term. We start at a point in society that is neither euphoric (the Roaring Twenties) nor disastrous (the Great Depression). As time progresses, events occur that push the Mood in one direction or the other, with a 50/50 chance of either occurring. The assumption in this model is that no matter what the Mood is, it can still get better or worse with equal probability. Each of the following graphs depicts a randomly generated timeline.

On the graph are two thresholds – one of a positive nature, where things seemingly can’t get much better, and one of a negative nature, whereby all it should take is a nudge to send us down the path to disaster. In any of the situations we’ve discussed in this part of the series, when we are on the brink of apocalypse, the statistical likelihood that the situation would improve at that point should not be more than 50/50. If true, running a few simulations shows that an apocalypse is actually fairly likely. Figures 1 and 3 pop over the positive limit and then turn back toward neutral. Figure 2 seems to take off in the positive direction even after passing the limit. Figure 4 hits and goes through the negative limit several times, implying that if our reality actually worked this way, apocalyptic situations would actually be likely.
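For readers who would rather try this in code than in a spreadsheet, here is a minimal Python sketch of the unbiased model described above. It is not the original spreadsheet: the function names, the step size of 1, the 1000-step timeline, and the limit of 25 are all illustrative assumptions.

```python
import random


def simulate_mood(steps=1000, step_size=1.0, seed=None):
    """Return a Mood timeline that starts at neutral (0) and takes
    equally likely steps up or down, with no stabilizing force."""
    rng = random.Random(seed)
    mood = 0.0
    timeline = [mood]
    for _ in range(steps):
        # 50/50 chance that the next event improves or worsens the Mood,
        # regardless of how good or bad things already are.
        mood += step_size if rng.random() < 0.5 else -step_size
        timeline.append(mood)
    return timeline


def crosses_limit(timeline, limit):
    """True if the Mood ever reaches the positive or negative limit."""
    return any(abs(m) >= limit for m in timeline)


def breach_fraction(runs=200, steps=1000, limit=25.0):
    """Fraction of simulated timelines that reach either limit.
    Each run uses its own seed so the result is reproducible."""
    hits = sum(
        crosses_limit(simulate_mood(steps=steps, seed=i), limit)
        for i in range(runs)
    )
    return hits / runs


if __name__ == "__main__":
    print(f"Timelines reaching a limit: {breach_fraction():.0%}")
```

With these assumed settings, a large share of the timelines reach one of the limits, which is the point of the paragraph above: if reality were a pure coin flip, breaching a threshold would be an ordinary outcome.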

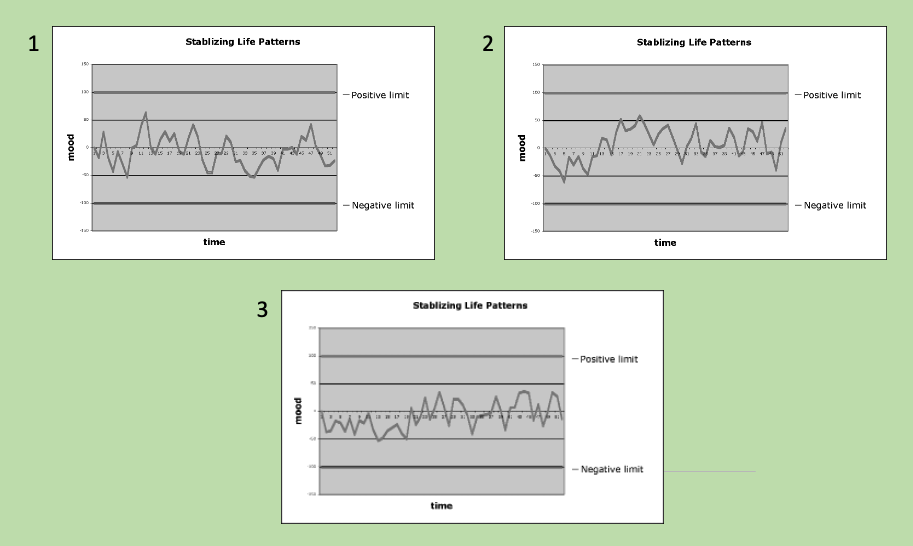

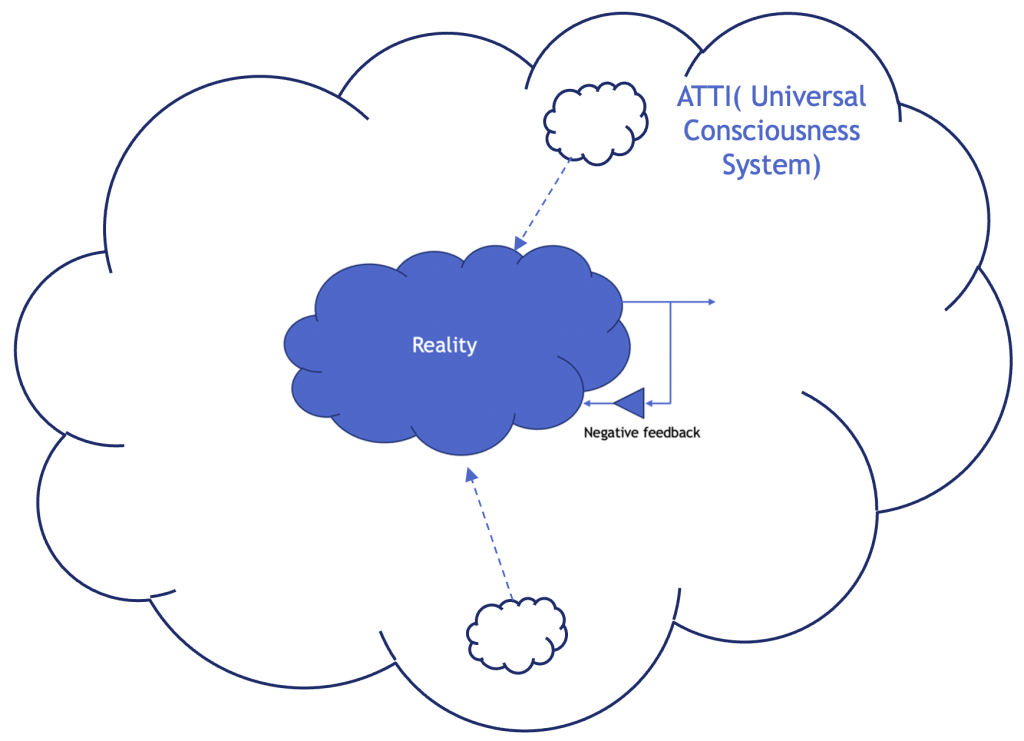

However, what always seems to happen is that when things get that bad, there is a stabilizing force of some sort. I made an adjustment to my reality model by inserting some negative feedback to model this stabilizing effect. For those unfamiliar with the term, complex systems can have positive or negative feedback loops; often both. Negative feedback tends to bring a system back to a stable state. Examples in the body include the maintenance of body temperature and blood sugar levels. If blood sugar gets too high, the pancreas secretes insulin which chemically reduces the level. When it gets too low, the pancreas secretes glucagon which increases the level. In nature, when the temperature gets high, cloud level increases, which provides the negative feedback needed to reduce the temperature. Positive feedback loops also exist in nature. The runaway greenhouse effect is a classic example.

When I applied the negative feedback to the reality model, all curves tended to stay within the positive and negative limits, as shown below.
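A minimal Python sketch of the same idea follows. The exact form of the feedback in the spreadsheet is not given here, so this version assumes the simplest one: each step pulls the Mood back toward neutral by a fixed fraction of its current value. The function name, the `feedback` value of 0.05, and the limit of 25 are illustrative assumptions.

```python
import random


def simulate_mood_with_feedback(steps=1000, step_size=1.0,
                                feedback=0.05, seed=None):
    """Return a Mood timeline where each event is still a 50/50 nudge,
    but a restoring pull proportional to the current Mood is applied
    on every step (an assumed form of negative feedback)."""
    rng = random.Random(seed)
    mood = 0.0
    timeline = [mood]
    for _ in range(steps):
        nudge = step_size if rng.random() < 0.5 else -step_size
        # The further the Mood is from neutral, the stronger the pull back.
        mood += nudge - feedback * mood
        timeline.append(mood)
    return timeline


if __name__ == "__main__":
    limit = 25.0
    worst = max(
        max(abs(m) for m in simulate_mood_with_feedback(seed=i))
        for i in range(200)
    )
    print(f"Largest excursion over 200 timelines: {worst:.1f} (limit {limit})")
```

Under this assumption the Mood can never drift further than `step_size / feedback` from neutral (20 with the values above), so every timeline stays inside a limit of 25 no matter how the coin flips fall.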

Doesn’t it feel like this is how our reality works at the most fundamental level? But how likely would it be that every aspect of our reality is subject to negative feedback? And where does that negative feedback come from?

REALITY IS ADAPTIVE

This is how I believe that reality works at its most fundamental level…

Why would that be? Two obvious ideas come to mind.

- Natural causes – this would be the viewpoint of reductionist materialist scientists. Heat increase causes ice sheets to melt which creates more water vapor, generating more clouds, reducing the heating effect of the sun. But this does not at all explain why the human condition, and the civilization trends that we’ve discussed in this article, always tend toward neutral.

- God – this would be the viewpoint of people whose beliefs are firmly grounded in their religion. God is always intervening to prevent catastrophes. But apparently God doesn’t mind minor catastrophes and plenty of pain and suffering in general. More importantly though, this does not explain dynamic reality generation.

DYNAMIC REALITY GENERATION

Enter Quantum Mechanics.

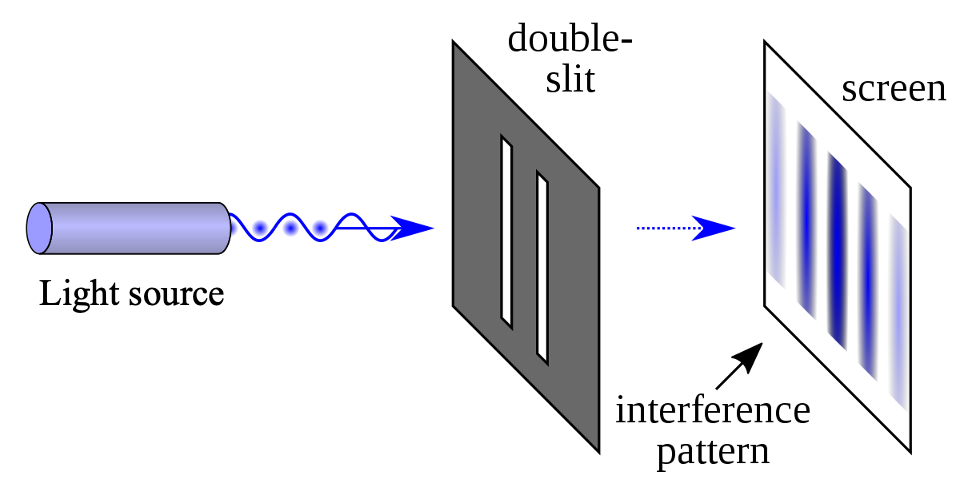

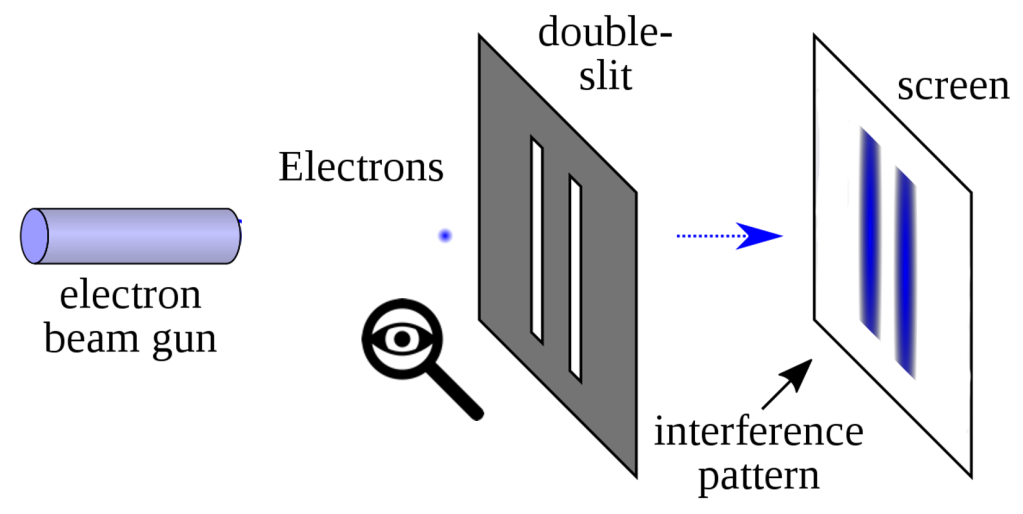

The double-slit experiment was first done by Thomas Young back in 1801, and was an attempt to determine if light was composed of particles or waves. A beam of light was projected at a screen with two vertical slits. If light was composed of particles, only two bands of light should appear on the phosphorescent screen behind the one with the slits. If wave-based, an interference pattern should result. The wave theory was initially confirmed experimentally, but that was later called into question by Einstein and others.

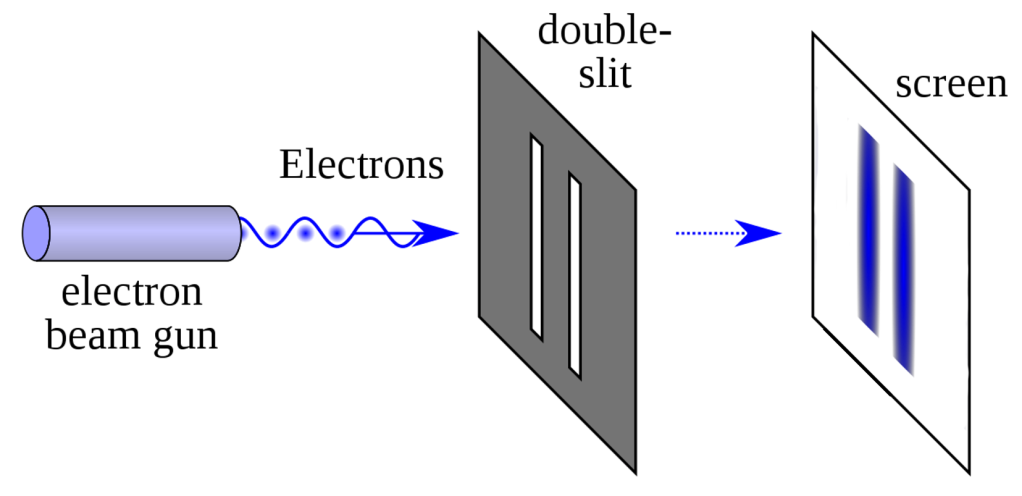

The experiment was later done with particles, like electrons, and it was naturally assumed that these would be shown to be hard, fixed particles, generating the expected pattern shown on the right.

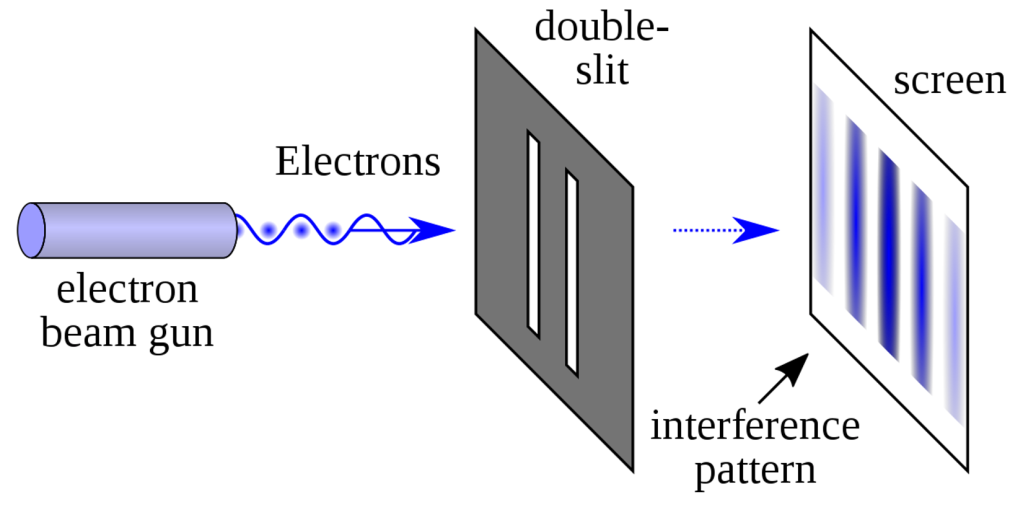

However, what resulted was an interference pattern, implying that the electrons were actually waves. Thinking that perhaps electrons were interfering with each other, the experiment was modified to shoot one electron at a time. And still the interference pattern slowly built up on the back screen.

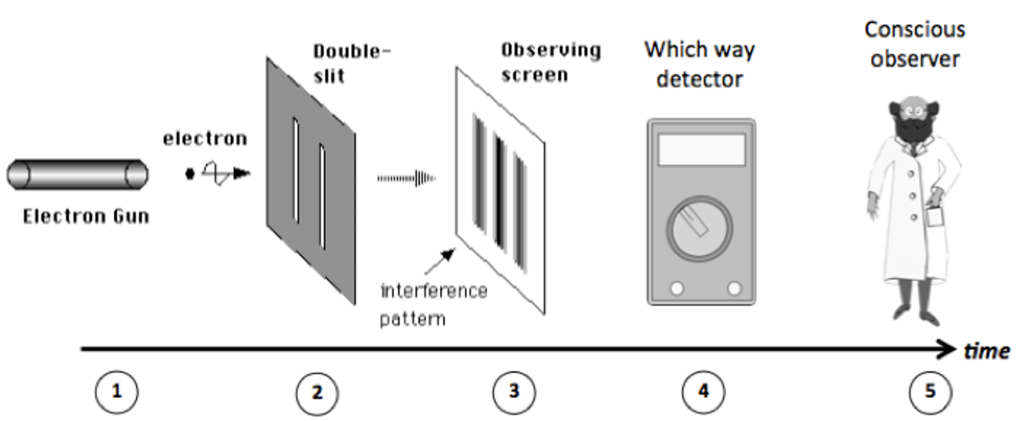

To make sense of the interference pattern, experimenters wondered if they could determine which slit each electron went through, so they put a detector before the double slit. Et voilà, the interference pattern disappeared! It was as if the actual conscious act of observation converted the electrons from waves to particles. The common interpretation was that the electrons actually exist only as a probability function and the observation actually snaps them into existence.

It is very much like the old adage that a tree falling in the woods makes no sound unless someone is there to hear it. Of course, this idea of putting consciousness as a parameter in the equations of physics generated no end of consternation for the deterministic materialists. They have spent the last twenty years designing experiments to disprove this “Observer Effect” to no avail. Even when the “which way” detector is placed after the double slit, the interference pattern disappears. The only tenable conclusion is that reality does not exist in an objective manner and its instantiation depends on something. But what?

The diagram below helps us visualize the possibilities. When does reality come into existence?

Clearly it is not at points 1, 2 or 3, because it isn’t until the “which way” detector is installed that we see the shift in reality. So is it due to the detector itself or the conscious observer reading the results of the detector? One could imagine experiments where the results of the “which way” detector are hidden from the conscious observer for an arbitrary period of time; maybe printed out and put in an envelope without looking, where it sits on the shelf for a day while the interference pattern exists. Then someone opens the envelope and suddenly the interference pattern disappears. I have always suspected that the answer will be that reality comes into existence at point 4. I believe that it is just logical that a reality generating universe be efficient. Recent experiments bear this out.

I believe this says something incredibly fundamental about the nature of our reality. But what would efficiency have to do with the nature of reality? Let’s explore a little further – what kinds of efficiencies would this lead to?

POP QUIZ! – is reality analog or digital? There is actually no conclusion to this question and many papers have been written in support of either point of view. But if our reality is created on some sort of underlying construct, there is only one answer – it has to be digital. Here’s why…

How much information would it take to fully describe the cup of coffee on the right?

In an analog reality, it would take an infinite amount of information.

In a digital reality, fully modeled at the Planck resolution (what some people think is the deepest possible digital resolution), it would require 4×10^71 bits/second, give or take. It’s a huge number for sure, but infinitely less than the analog case.

But wait a minute. Why would we need that level of information to describe a simple cup of coffee? So let’s ask a different question… How much information is needed for a subjective human experience of that cup of coffee – the smell, the taste, the visual experience? You don’t really need to know the position and momentum vector of each subatomic particle in each molecule of coffee in that cup. All you need to know is what it takes to experience it. The answer is roughly 1×10^9 bits/second. In other words, there could be as much as a 4×10^62 factor of compression involved in generating a subjective experience. Put another way, we don’t really need to know where each electron is in the coffee, just as you don’t need to know which slit each electron goes through in the double slit experiment. That is, UNTIL YOU MEASURE IT!
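The compression factor is just the ratio of the two data rates. As a quick check, reading the figures above as 4×10^71 and 1×10^9 bits per second (the variable names are only illustrative):

```python
# Data rates quoted in the text, in bits per second.
planck_rate = 4 * 10**71      # full model at Planck resolution
experience_rate = 10**9       # subjective human experience

# Integer division keeps the result exact for numbers this large.
compression_factor = planck_rate // experience_rate

assert compression_factor == 4 * 10**62
print(f"Compression factor: {compression_factor:.0e}")
```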

So, the baffling results of the double slit experiments actually make complete sense if reality is:

- Digital

- Compressed

- Dynamically generated to meet the needs of the inhabitants of that reality

Sounds computational, doesn’t it? In fact, if reality were a computational system, it would make sense for it to need efficiencies at this level.

There are such systems – one well known example is a video game called No Man’s Sky that dynamically generates its universe as the user plays the game. Art inadvertently imitating life?

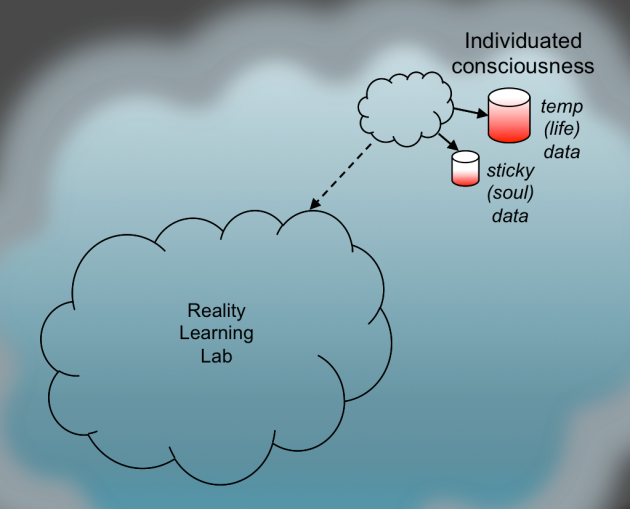

Earlier in this article I suggested that the concept of God could explain the stabilization effect of our reality. If we redefine “God” to mean “All That There Is” (of which, our apparent physical reality is only a part), reality becomes a “learning lab” that needs to be stable for our consciousnesses to interact virtually.

I wrote about this and proposed this model back in 2007 in my first book “The Universe-Solved!” In 2021, an impressive set of physicists and technologists came up with the same theory, which they called “The Autodidactic Universe.” They collaborated to explore methods, structures, and topologies in which the universe might be learning and modifying its laws according to what is needed. Such ideas included neural nets and Restricted Boltzmann Machines. This provides an entirely different way of looking at any potential apocalypse. And it makes you wonder…

UFO INTERVENTION

In 2021, over one hundred military personnel, including Retired Air Force Captain Robert Salas, Retired First Lieutenant Robert Jacobs, and Retired Captain David Schindele met at the National Press Club in Washington, DC to present historical case evidence that UFOs have been involved with disarming nuclear missiles. A few examples…

- Malmstrom Air Force Base, Montana, 1967 – “a large glowing, pulsating red oval-shaped object hovering over the front gate,” as alarms went off showing that nearly all 10 missiles displayed in the control room had been disabled.

- Minot Air Force Base, North Dakota, 1966 – Eight airmen said that 10 missiles at silos in the vicinity all went down with guidance and control malfunctions when an 80- to 100-foot wide flying object with bright flashing lights had hovered over the site.

- Vandenberg Air Force Base, California, 1964 – “It went around the top of the warhead, fired a beam of light down on the top of the warhead.” After circling, it “then flew out the frame the same way it had come in.”

- Ukraine, 1982 – launch countdowns were activated for 15 seconds while a disc-shaped UFO hovered above the base, according to declassified KGB documents.

As the History Channel reported, areas of high UFO activity are correlated with nuclear and military facilities worldwide.

Perhaps UFOs are an artifact of our physical reality learning lab, under the control of some conscious entity or possibly even an autonomous (AI) bot in the system, as part of the “autodidactic” programming mechanisms that maintain stability in our programmed reality. Other mechanisms could involve things like adjusting the availability of certain resources or even nudging consciousnesses toward solutions to problems. If this model of reality is accurate, we may find that we have little to worry about regarding an AI apocalypse. Instead it will just be another force that contributes toward our evolution.

To that end, there is also a sector of thinkers who recommend a different approach. Rather than fight the AI progression, or simply let the chips fall, we should welcome our AI overlords and merge with them. That scenario will be explored in Part 10 of this series.

NEXT: How to Survive an AI Apocalypse – Part 10: If You Can’t Beat ’em, Join ’em