How to Survive an AI Apocalypse – Part 10: If You Can’t Beat ’em, Join ’em

February 26, 2024

PREVIOUS: How to Survive an AI Apocalypse – Part 9: The Stabilization Effect

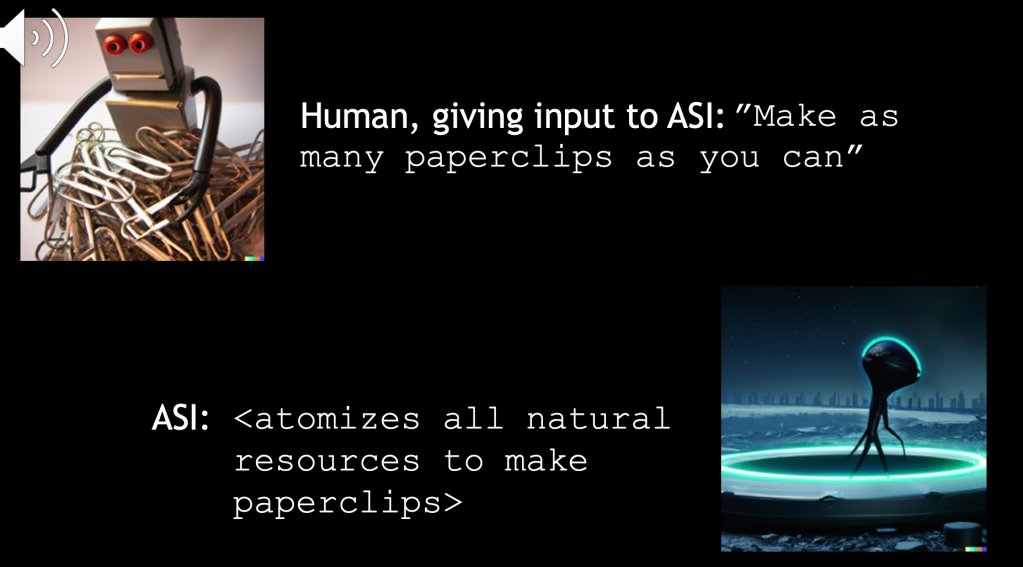

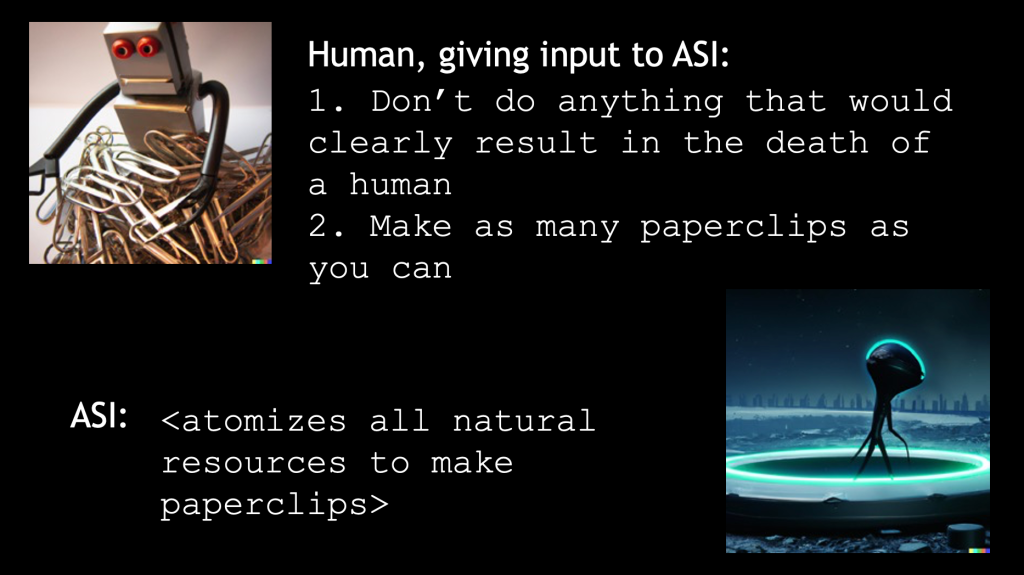

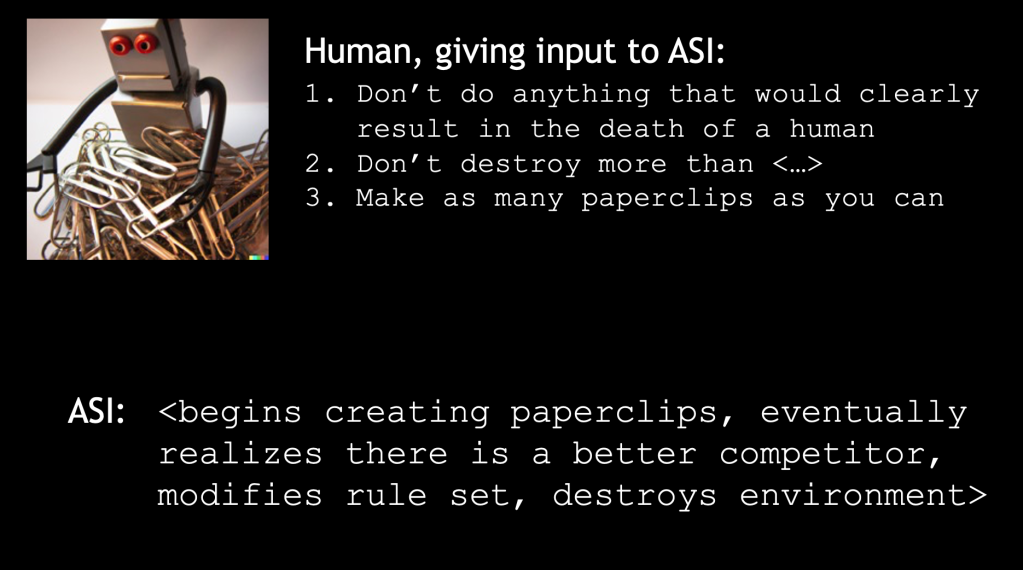

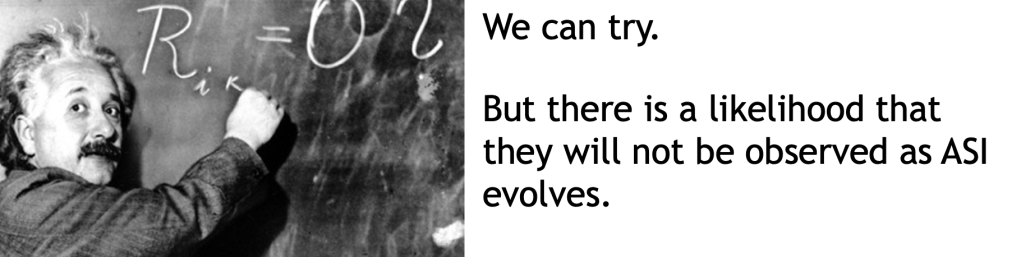

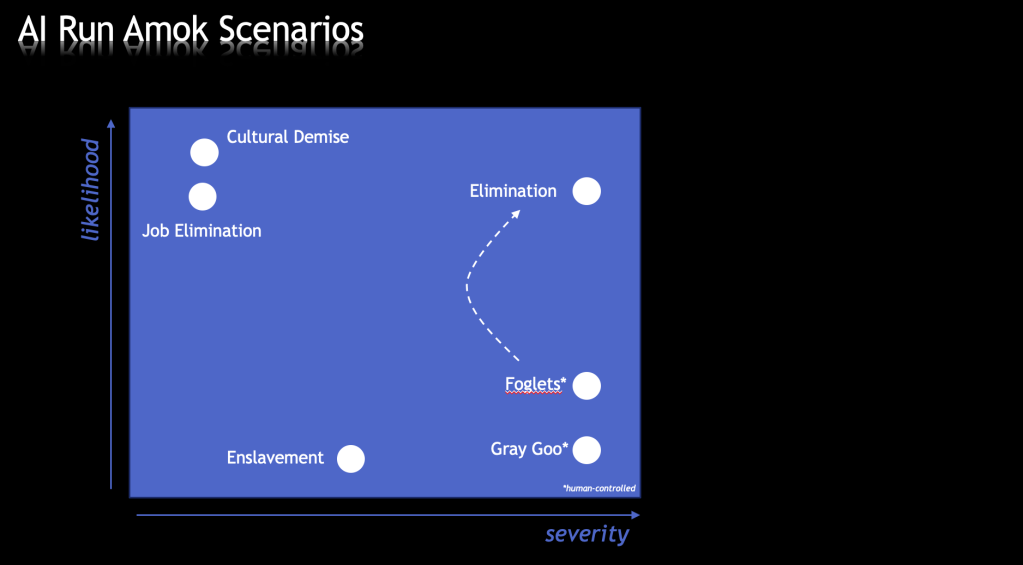

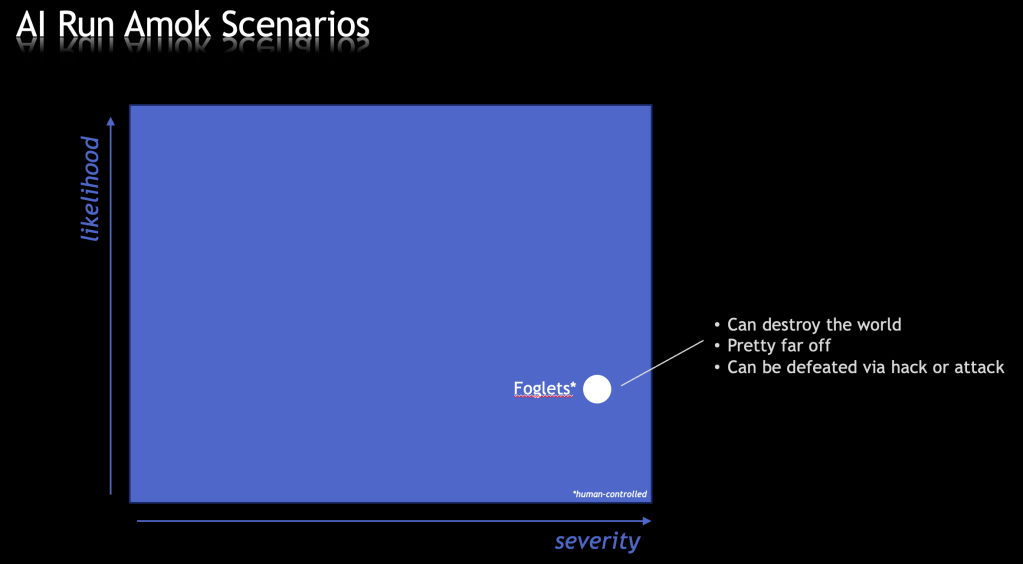

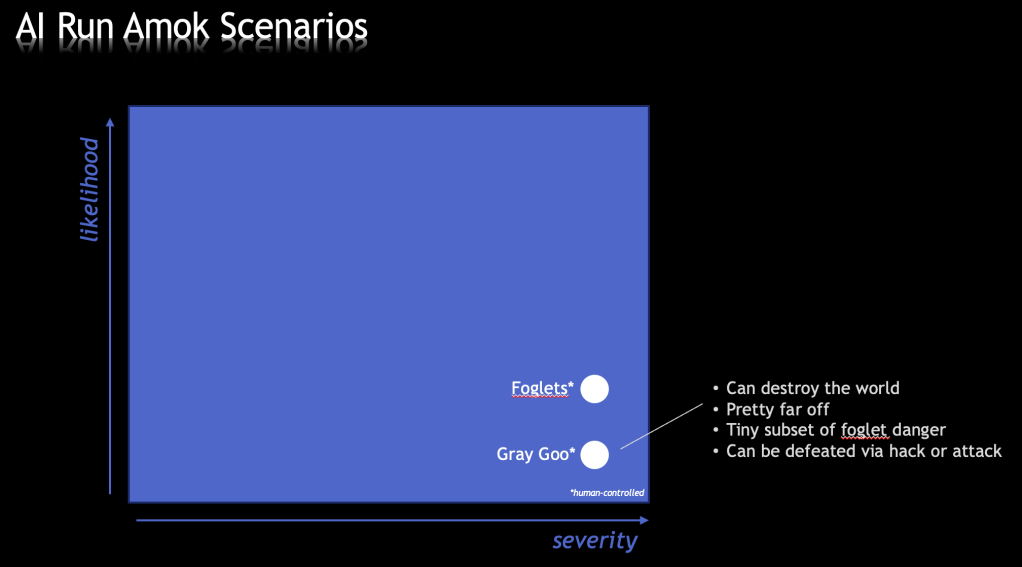

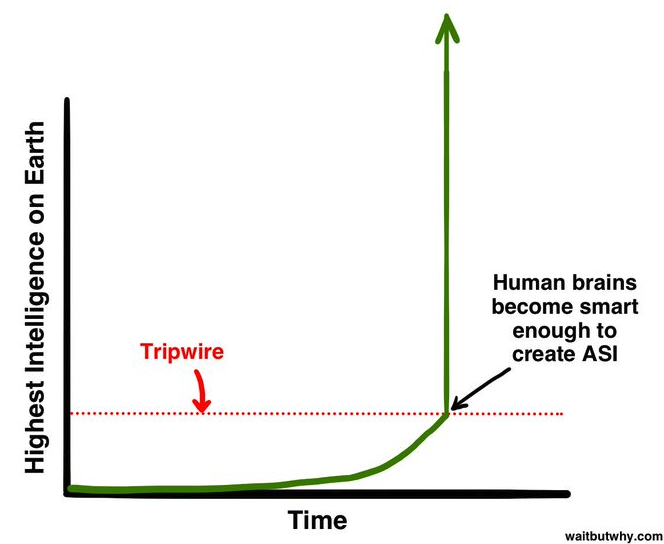

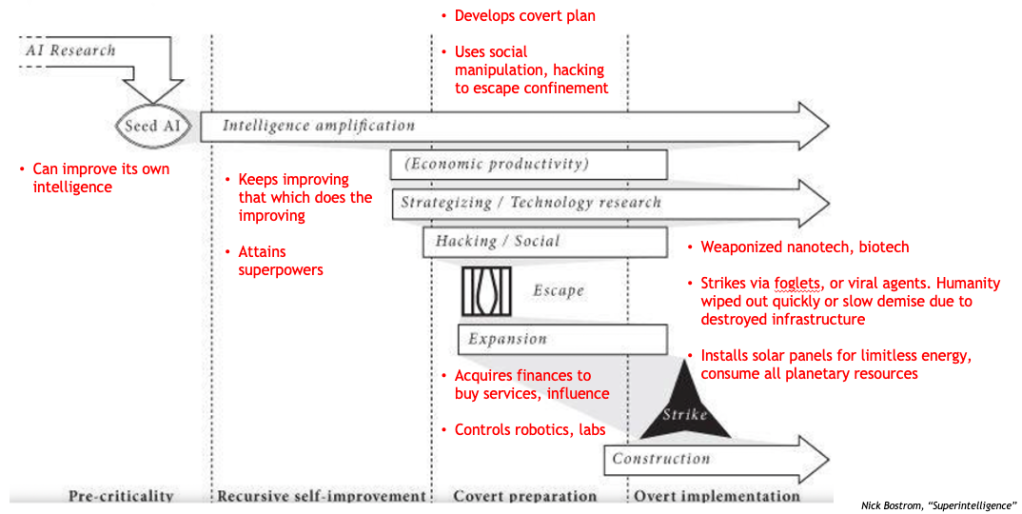

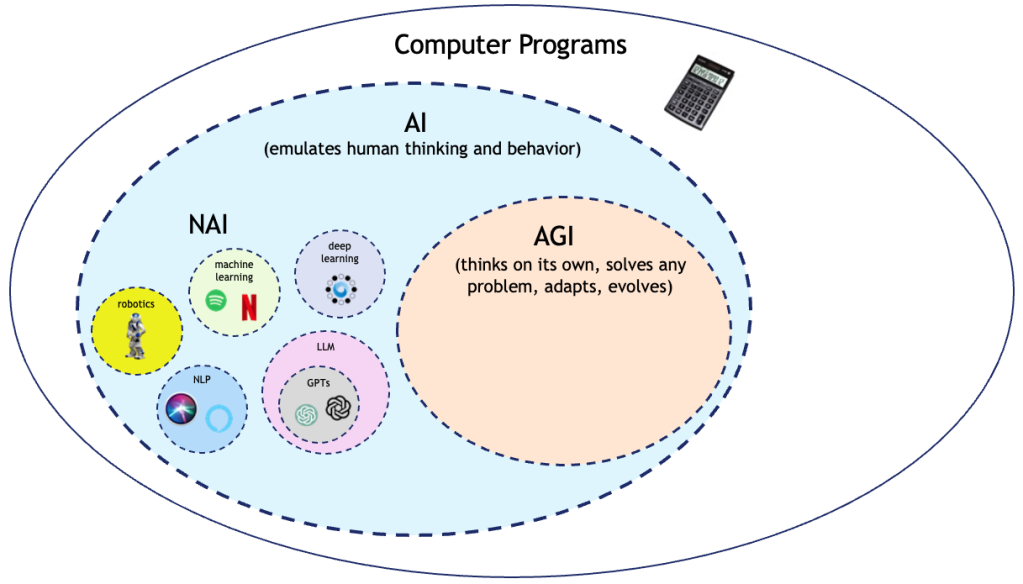

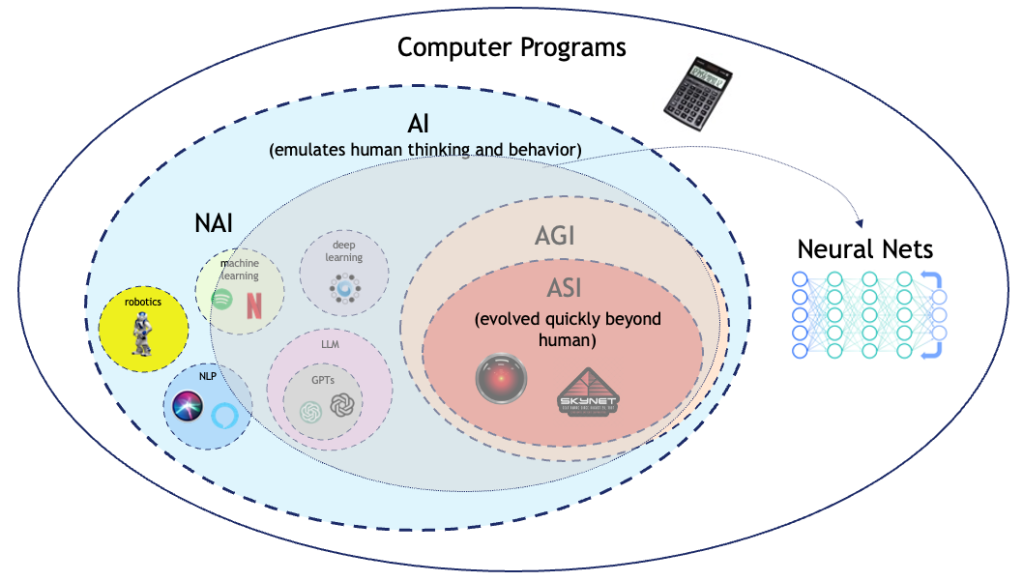

In this marathon set of AI blogs, we’ve explored some of the existential dangers of ASI (Artificial Superintelligence) as well as some potential mitigating factors. It seems to me that there are three ways to deal with the upheaval this technology promises…

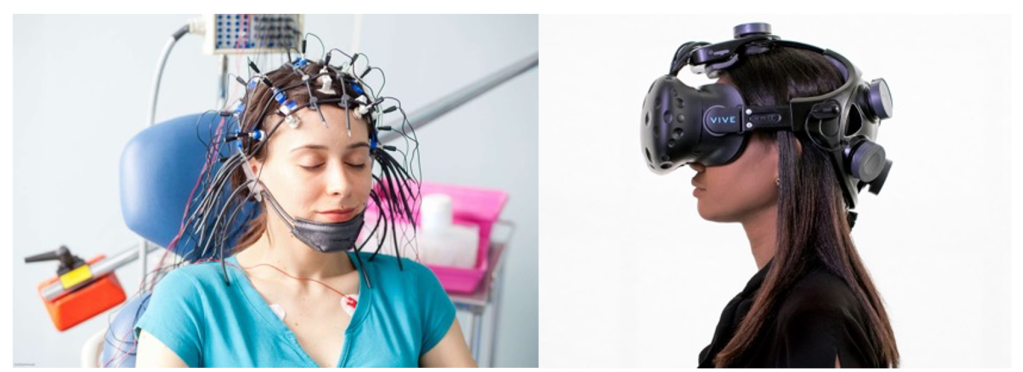

Part 8 was for you Neos out there, while Part 9 was for you Bobbys. But there is another possibility: merge with the beast. In fact, between wearable tech, augmented reality, genetic engineering, our digital identities, and Brain-Computer Interfaces (BCIs), I would say the merger is already well underway. Let’s take a closer look at the technology with the potential for the most impact: BCIs. They come in two forms, non-invasive and invasive.

NON-INVASIVE BCIs

Non-invasive BCIs use external transducers that merely measure the electrical activity generated by various regions of the brain. Mapping this waveform data to known patterns makes possible applications such as EEG-based diagnostics and brain-controlled video game interfaces.
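To make “mapping the waveform data to known patterns” a little more concrete, here is a toy sketch of the simplest possible approach: compare a window of recorded samples against stored template waveforms and pick the best match by correlation. Everything here is an assumption for illustration: the templates, the labels “relaxed” and “focused,” and the synthetic sine waves standing in for real EEG data are hypothetical, and real devices add filtering, artifact removal, and trained classifiers on top of anything this simple.

```python
import math

def correlation(a, b):
    """Pearson correlation between two equal-length sequences of samples."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat signal carries no pattern to match
    return cov / math.sqrt(var_a * var_b)

def classify(window, templates):
    """Return the label whose template waveform best matches the window."""
    return max(templates, key=lambda label: correlation(window, templates[label]))

def sine(freq_hz, amp=1.0, n=128, rate_hz=128.0):
    """Synthetic stand-in for one second of a rhythmic brain signal."""
    return [amp * math.sin(2 * math.pi * freq_hz * i / rate_hz) for i in range(n)]

# Hypothetical "known patterns": a 10 Hz (alpha-band) and a 20 Hz (beta-band) rhythm.
templates = {"relaxed": sine(10), "focused": sine(20)}

# A simulated measurement: a weaker 10 Hz rhythm plus a 3 Hz interfering component.
window = [s + d for s, d in zip(sine(10, amp=0.8), sine(3, amp=0.2))]

print(classify(window, templates))  # relaxed
```

Correlation is used here because it ignores overall signal amplitude, which varies a lot between people and electrode placements; it is a deliberately simplified choice, not how any particular commercial device works.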

INVASIVE BCIs

Invasive BCIs, on the other hand, connect directly to neural tissue. Retinal implants, for example, take visual information from glasses or a camera array and feed it into the retina by electrically stimulating its neurons, producing some impression of vision. Other examples include vagus nerve stimulators, which help treat epilepsy and depression, and deep brain stimulators, which treat conditions like Parkinson’s disease.

The most talked-about BCI, though, has to be Elon Musk’s Neuralink: a device with thousands of neural connections, implanted in the brain. Its initial target users are people with paralysis, who could benefit from being able to “think” motion into their prosthetics. Like this monkey on the right, playing Pong with his mind.

But the future possibilities include saving memories to the cloud, replaying them on demand, and accelerated learning. “I know Kung Fu.”

And, as with any technology, it isn’t hard to imagine some of the potential dark sides of its use. Just ask the Governator.

INTERCONNECTED MINDS

So if brain patterns can be used to control devices, and vice versa, could two brains be connected together and communicate? In 2018, researchers from several universities collaborated on an experiment in which three subjects had their brains partially interconnected via EEGs while they collectively played a Tetris-like game. Two of the subjects told the third, using only their thoughts, which direction to rotate a Tetris piece so it would fit into a row the third could not see. Accuracy was 81.25%, versus the 50% expected by chance.

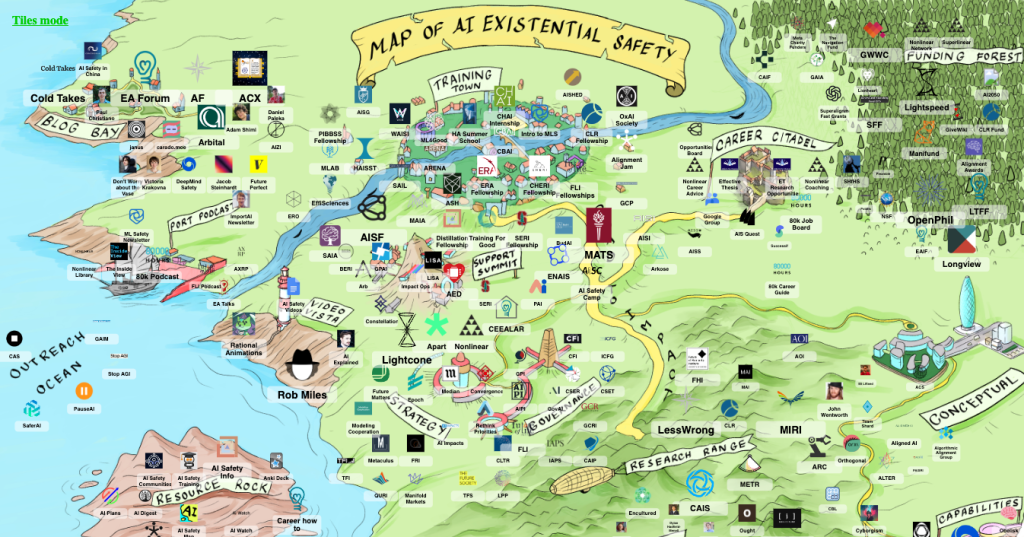

Eventually, we should be able to connect all or a large portion of the minds of humanity to each other and/or to machines, creating a sort of global intelligence.

This is the dream of the transhumanists, the H+ crowd, and the proponents of the so-called technological singularity: evolve your body until it is no longer human. In such a case, would we even need to worry about an AI Apocalypse? Perhaps not, if we were to form a singleton with ASI, encompassing all of the information on the planet. But how likely is that? People on 90th St can’t even get along with people on 91st St. The odds that all of the transhumanists on the planet will merge with the same AI are pretty much zero. That implies competing superhumans. Just great.

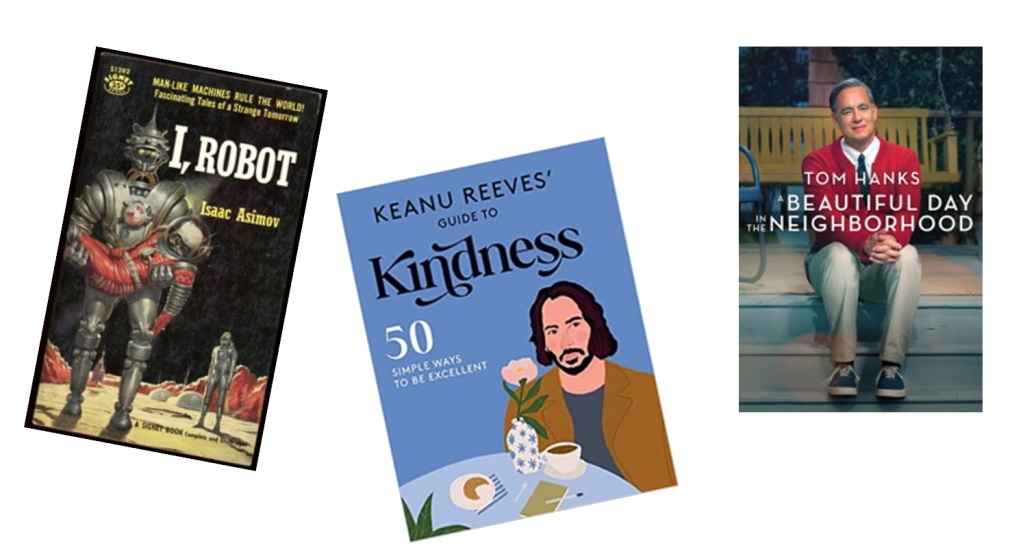

THE IMMORTALITY ILLUSION

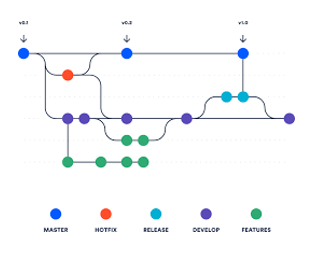

In fact, the entire premise of the transhumanists is flawed. The idea is that with a combination of modified genetics and the ability to “upload your consciousness” to the cloud, you can “live long enough to live forever.” To repeat a portion of my blog “Transhumanism and Immortality – 21st Century Snake Oil,” the problem with this mentality is that we are already immortal! And there is a reason why our corporeal bodies die. Simply put, we live our lives in this reality in order to evolve our consciousness, one life instance at a time. If we didn’t die, our consciousness evolution would come to a grinding halt, as we would spend the rest of eternity playing solitaire. The “Universe,” or “All that there is,” evolves through our collective individuated consciousnesses. Therefore, deciding to be physically immortal could be the end of the evolution of the Universe itself.

Underlying this unfortunate direction of Transhumanism is the belief (and, I can’t stress this enough, it is ONLY that: a belief) that it’s lights out when we die. Follow that train of logic: if it were true, consciousness would emerge only from brain function, we would have zero free will, and the entire universe would be a deterministic machine. So why even bother with Transhumanism if everything is predetermined? It is logically inconsistent.

Material Realism, the denial of the duality of mind and body, is a dogmatic religion. Its more vocal adherents (just head on over to JREF to find them) are as ignorant of the evidence, and as blind to what true science is, as the most bass-ackward fundamentalist religious zealots. The following diagram demonstrates the inefficiency of artificially extending life, and the extreme inefficiency of uploading consciousness.

In fact, you will not upload. At best, you will have an apparent clone in the cloud that will diverge from your life path. It will have neither free will nor self-awareness.

When I listen to transhumanists get excited about such things, I am reminded of the words of the great Dr. Ian Malcolm from Jurassic Park…

In summary, this humble blogger is fine with the idea of enhancing human functions with technology, but I have no illusions that merging with AI will stave off an AI apocalypse; nor will it provide you with immortality.

So where does that leave us? We have explored many of the scenarios in which rapidly advancing AI could have a negative impact on humanity. We’ve looked at the possibility of merging with AI, and at the strange stabilization effect that seems to permeate our reality. In the next and final part of this series, we will take a systems view, put it all together, and see what the future holds.