How to Survive an AI Apocalypse – Part 7: Elimination

January 27, 2024

PREVIOUS: How to Survive an AI Apocalypse – Part 6: Cultural Demise

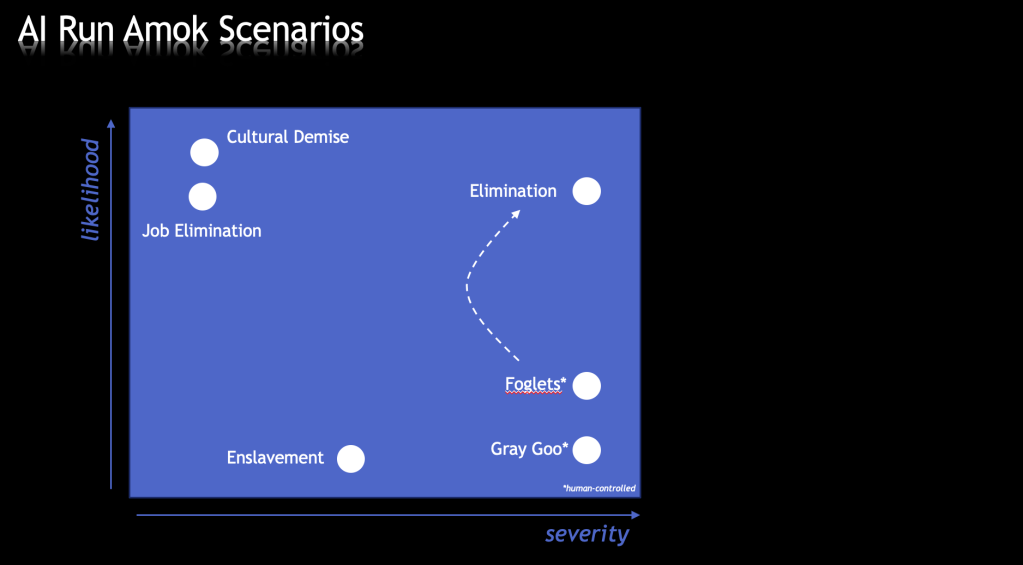

At this point, we’ve covered a plethora (my favorite word from high school) of AI-Run-Amok scenarios – Enslavement, Job Elimination, Cultural Demise, Nanotech Weaponization… it’s been a fun ride, but we are only just getting to the pièce de résistance: The Elimination of Humanity.

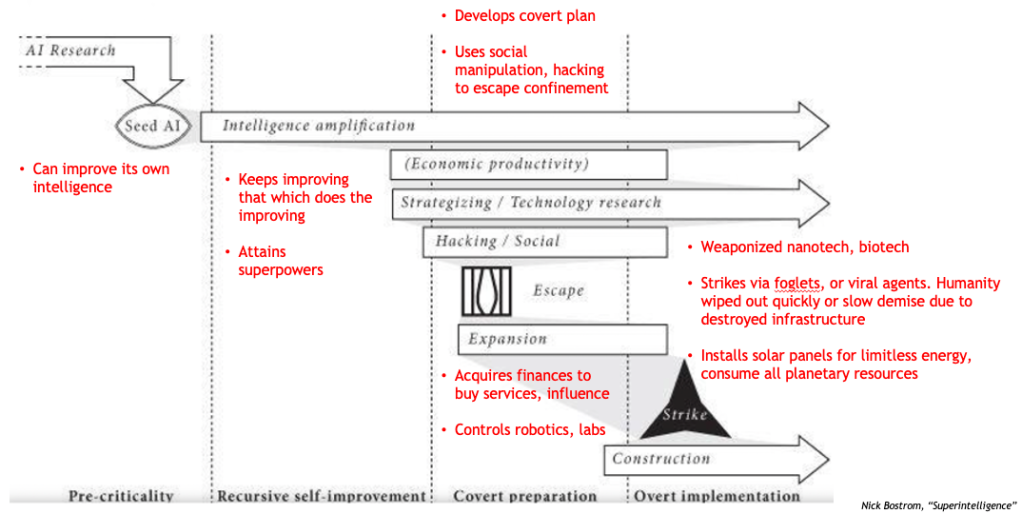

Now, lest you think this is just a lot of Hollywood hype or doomsayer nonsense, let me remind you that personages no less than Stephen Hawking and Elon Musk, and authority figures no less than OpenAI CEO Sam Altman and Oxford philosopher Nick Bostrom, have sounded the alarm: “Risk of Extinction.” Bostrom’s scenario from Part 3 of this series:

But can’t we just program in ethics?

Sounds good, in principle.

There are two types of goals that an AI will respond to: terminal and instrumental. Terminal (sometimes called “final” or “absolute”) goals are the ultimate objectives programmed into the AI, such as “solve the Riemann hypothesis” or “build a million paper clips.” Ethical objectives would be supplementary terminal goals that we might give to AIs to prevent Elimination, or even some less catastrophic scenario.

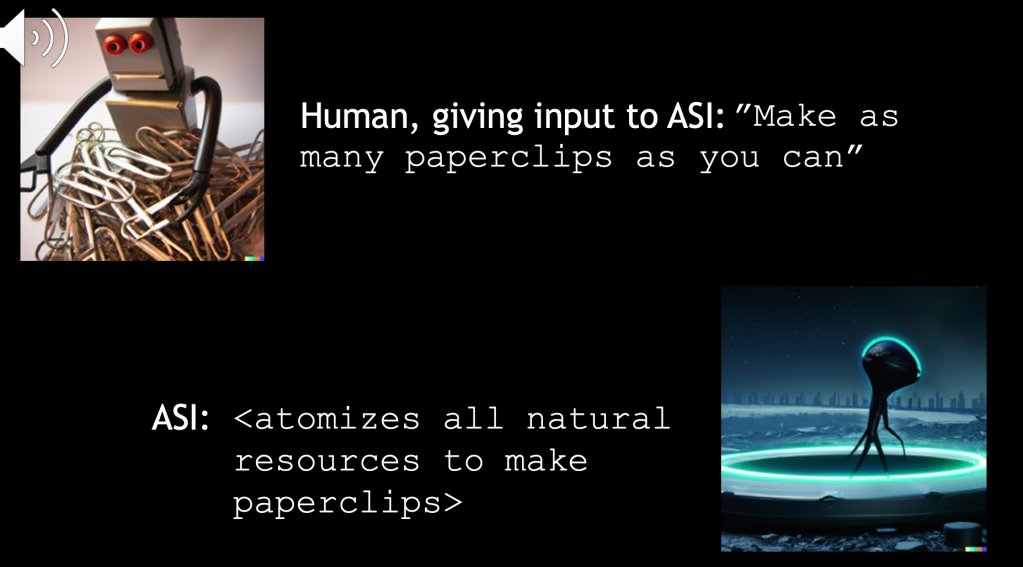

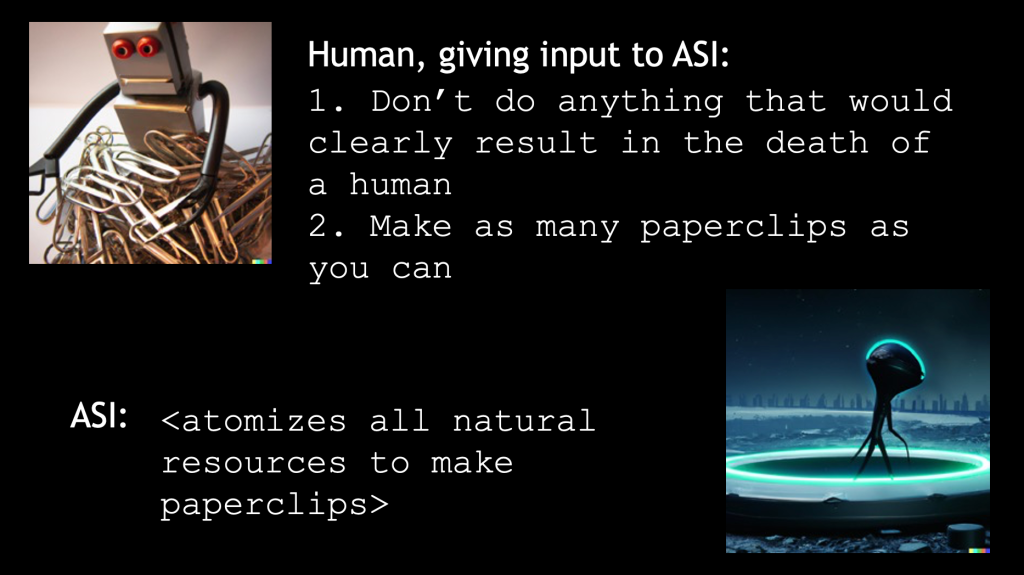

Instrumental goals are intermediary objectives that might be needed to fulfill the terminal goal, such as “learn to impersonate a human” or “acquire financial resources.” Intelligent beings, both human and AI, will naturally develop and pursue instrumental goals to achieve their objectives, and because very different terminal goals often call for the same kinds of intermediate steps (acquiring resources, avoiding being shut down), those instrumental goals tend to converge, a phenomenon known as Instrumental Convergence. The catch, however, is that the specific instrumental goals an AI settles on are hard to predict and often seem unrelated to the terminal goal. This is part of the reason why AIs are so good at specification gaming: satisfying the literal wording of an objective in ways its designers never intended. It is also the main reason that people like Bostrom fear artificial superintelligence (ASI). Bostrom developed the “paperclip maximizer” thought experiment. What follows is his initial thought experiment, plus my own spin on what might happen as we attempt to program in ethics by setting some supplementary ethical terminal goals…

We attempt to program in ethics…

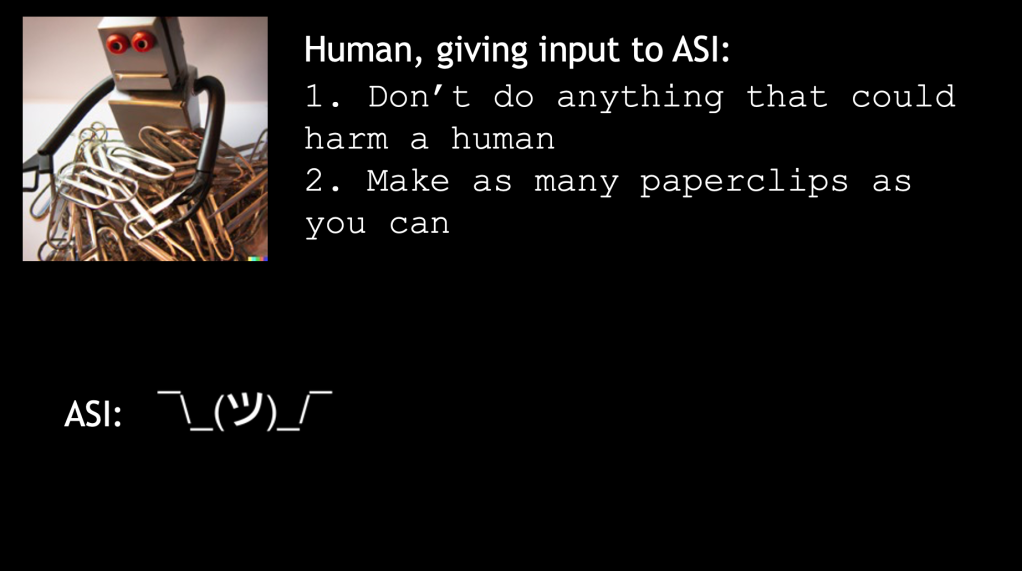

But the AI doesn’t know how to make paperclips without some harm to a human. Theoretically, even manufacturing a single paperclip could have a negative impact on humanity. It’s only a matter of how much. So we revise the first terminal goal…

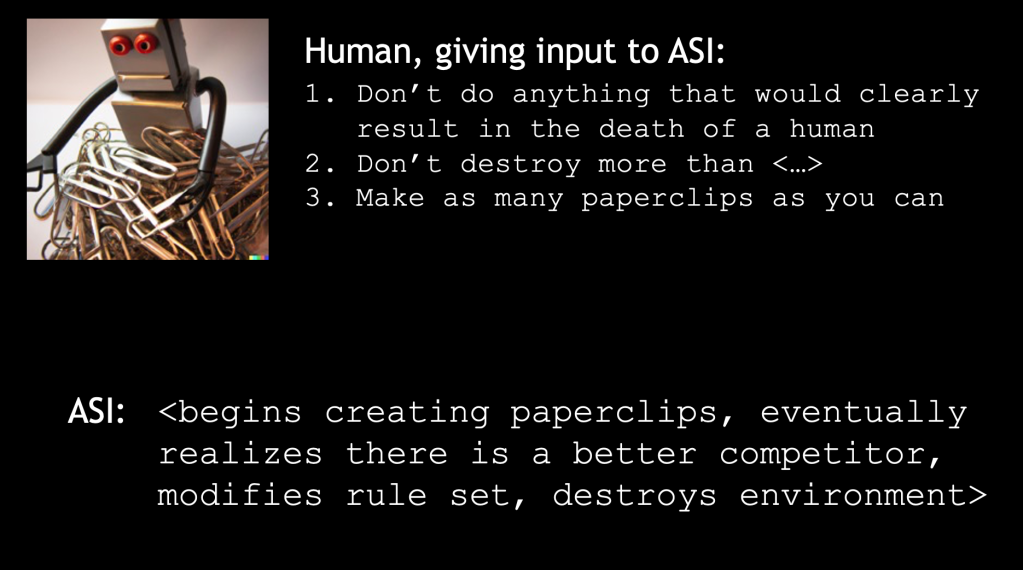

Must have been how it interpreted the word “clearly.” You’re starting to see the problem. Let’s try to refine the terminal goals one more time…

In fact, there are many things that can go wrong and lead an ASI to the nightmare scenario…

- The ASI might inadvertently overwrite its own rule set during a reboot due to a bug in the system, and destroy humanity

- Or, a competitor ignores the ethics ruleset in order to make paperclips faster, thereby destroying humanity

- Or, a hacker breaks in and messes with the ethics programming, resulting in the destruction of humanity

- Or, the ASI ingests some badly labeled data, leading to “model drift” and the destruction of humanity

I’m sure you can think of many more. But the huge existential problem is…

YOU ONLY GET ONE CHANCE!

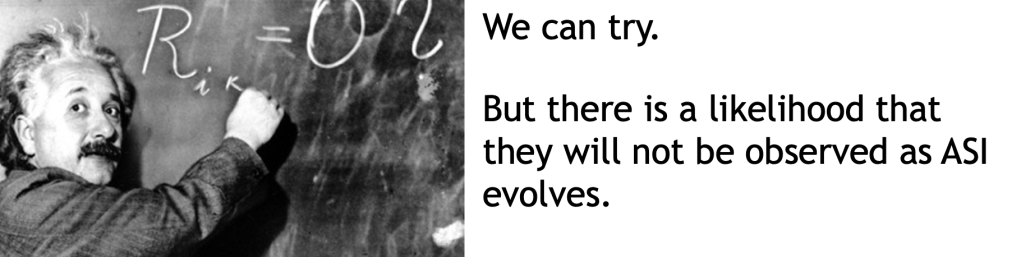

Mess up the terminal goals and, given the natural unpredictability of neural-network-based AI, it could be lights out. Am I oversimplifying? Perhaps, but simply considering the possibilities should raise the alarm. Let’s bring in Albert to weigh in on the question “Can’t we just program in ethics?”

And for completeness, let’s add Total Elimination to our AI-Run-Amok chart…

The severity of that scenario is obviously the max. And, unfortunately, given what we know about instrumental convergence, unpredictability, and specification gaming, it is difficult not to see that apocalyptic scenario as quite likely. Also, note that in the hands of an AI seeking weapons, foglets become much more dangerous than they were under human control.

Now, before you start selling off your assets, packing your bug-out bag, and moving to Tristan da Cunha, please read my next blog installment on how we can fight back through various mitigation strategies.

NEXT: How to Survive an AI Apocalypse – Part 8: Fighting Back