Will Evolving Minds Delay The AI Apocalypse? – Part II

February 10, 2019

The idea of an AI-driven Apocalypse is based on AI outpacing humanity in intelligence. The point at which that might happen depends on how fast AI evolves and how fast (or slowly) humanity evolves.

In Part I of this article, I demonstrated how, given current trends in the advancement of Artificial Intelligence, any AI Apocalypse, Singularity, or what have you, is probably much further out than the transhumanists would have you believe.

In this part, we will examine the other half of the argument by considering the nature of the human mind and how it evolves. To do so, it is instructive to consider the mind as a complex system, along with the systemic nature of the environments that minds and AIs engage with, since those environments are what general intelligence (or AGI, in the case of machines) is measured against.

David Snowden has developed a framework for categorizing systems called Cynefin. The four types of systems are:

- Simple – e.g. a bicycle. A Simple system is a simple deterministic system characterized by the fact that most anyone can make decisions and solve problems regarding such systems – it takes something called inferential intuition, which we all have. If the bicycle seat is loose, everyone knows that to fix it, you must look under the seat and find the hardware that needs tightening.

- Complicated – e.g. a car. Complicated systems are also deterministic systems, but unlike Simple systems, solutions to problems in this domain are not obvious and typically require analysis and/or experts to figure out what is wrong. That’s why you take your car to the mechanic and why we need software engineers to fix defects.

- Complex – Complex systems, while perhaps deterministic from a philosophical point of view, are not deterministic in any practical sense. No matter how much analysis you apply and no matter how experienced the expert is, they will not be able to completely analyze and solve a problem in a complex system. That is because such systems are subject to an incredibly complex set of interactions, inputs, dependencies, and feedback paths that all change continuously. So even if you could apply sufficient resources toward analyzing the entire system, by the time you got your result, your problem state would be obsolete. Examples of complex systems include ecosystems, traffic patterns, the stock market, and basically every single human interaction. Complex systems are best addressed through holistic intuition, which is something that humans possess when they are very experienced in the applicable domain. Problems in complex systems are best addressed by a method called Probe-Sense-Respond, which consists of probing (doing an experiment designed intuitively), sensing (observing the results of that experiment), and responding (acting on those results by moving the system in a positive direction).

- Chaotic – Chaotic systems are rarely occurring situations that are unpredictable because they are novel and therefore don’t follow any known patterns. An example would be the situation in New York City after 9/11. Responding to chaotic systems requires yet another method, different from those used with the other types of systems. Typically, just taking some definitive form of action may be enough to move the system from Chaotic to Complex. The choice of action is a deeply intuitive decision that may be based on an incredibly deep, rich, and nuanced set of knowledge and experiences.
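To make the Probe-Sense-Respond method from the Complex bullet a little more concrete, here is a minimal toy sketch in Python. Everything in it is my own illustration rather than part of Cynefin: the function name `probe_sense_respond`, the numeric "state," and the scoring function are all hypothetical stand-ins. A real complex system also keeps shifting while you probe it, which this toy does not capture.

```python
import random


def probe_sense_respond(measure, state, probes, rounds=50, seed=0):
    """Toy Probe-Sense-Respond loop (all names here are hypothetical).

    measure: function mapping a state to a score, higher is better
    state:   starting numeric state of the system
    probes:  small candidate changes to try
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    history = []
    for _ in range(rounds):
        # Probe: run a small, safe-to-fail experiment.
        change = rng.choice(probes)
        trial = state + change
        # Sense: observe what the experiment did to the system.
        before, after = measure(state), measure(trial)
        # Respond: keep the change only if it moved things in a positive direction.
        if after > before:
            state = trial
        history.append((change, before, after))
    return state, history


if __name__ == "__main__":
    # Stand-in "system": the score peaks at a point the loop is never told about.
    score = lambda s: -abs(s - 10)
    final, log = probe_sense_respond(score, state=0, probes=[-2, -1, 1, 2])
    print(final, score(final))
```

Note that the loop never analyzes the system or builds a model of it. It only experiments and keeps what works, which is the point of the method.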

Complicated systems are ideal for early AI. Problems like the ones analyzed in Stanford’s AI Index, such as object detection, natural language parsing, language translation, speech recognition, theorem proving, and SAT solving, all belong to the Complicated domain. AI technology at the moment is focused mostly on such problems, not things in the Complex domain, which are instead best addressed by the human brain. However, as processing speed and learning algorithms evolve, AI will start addressing issues in the Complex domain. Initially, a human mind will be needed to program or guide the AI systems toward a good sense-and-respond model. Eventually perhaps, armed with vague instructions like “try intuitive experiments from a large set of creative ideas that may address the issue,” “figure out how to identify the metrics that indicate a positive result from the experiment,” “measure those metrics,” and “choose a course of action that furthers the positive direction of the quality of the system,” an AI may succeed at addressing problems in the Complex domain.

The human mind of course already has a huge head start. We are incredibly adept at seeing vague patterns, sensing the non-obvious, seeing the big picture, and drawing from collective experiences to select experiments to address complex problems.

Back to our original question, as we lead AI toward developing the skills and intuition to replicate such capabilities, will we be unable to evolve our thinking as well?

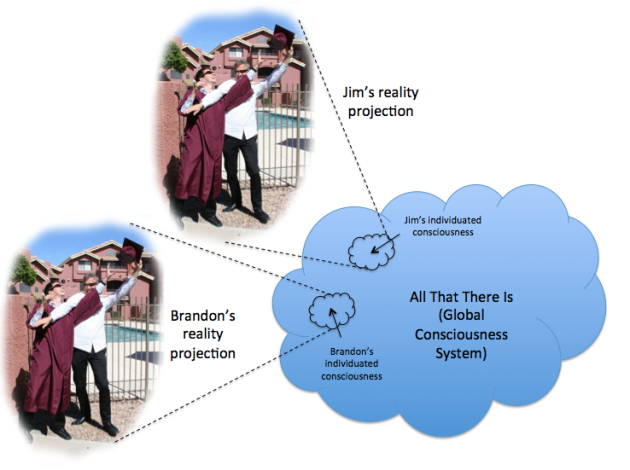

In the materialist paradigm, the brain is the limit for an evolving mind. This is why we think AI can out-evolve us: the brain’s capacity is fixed. However, in “Digital Consciousness” I have presented a large body of evidence that this is incorrect. In actuality, consciousness, and therefore the mind, is not emergent from the brain. Instead, it exists in a deeper level of reality, as shown in the figure.

Our consciousness interacts with a separate piece of All That There Is (ATTI) that I call the Reality Learning Lab (RLL), commonly known as “the reality we live in,” but more accurately described as our “apparent physical reality” – “apparent” because it is actually Virtual.

As discussed in my blog on creating souls, All That There Is (ATTI) has subdivided itself into components of individuated consciousness, each of which has a purpose to evolve. How it is constructed, and how the boundaries that make it individuated are formed, is beyond our knowledge (at the moment).

So what then is our mind?

Simply put, it is organized information. As Tom Campbell eloquently expressed it, “The digital world, which subsumes the virtual physical world, consists only of organization – nothing else. Reality is organized bits.”

As such, what prevents it from evolving in the deeper reality of ATTI just as fast as we can evolve an AI here in the virtual reality of RLL?

Answer – NOTHING!

Don’t get hung up on the fixed complexity of the brain. All our brain is needed for is to emulate the processing mechanism that appears to handle sensory input and mental activity. By analogy, we might consider playing a virtual reality game. In this game we have an avatar and we need to interact with other players. Imagine that a key aspect of the game is the ability to throw a spear at a monster or to shoot an enemy. In our (apparent) physical reality, we would need an arm and a hand to be able to carry out that activity. But in the game, it is technically not required. Our avatar could be arm-less and when we have the need to throw something, we simply press a key sequence on the keyboard. A spear magically appears and gets hurled in the direction of the monster. Just as we don’t need a brain to be aware in our waking reality (because our consciousness is separate from RLL), we don’t need an arm to project a spear toward an enemy in the VR game.

On the other hand, having the arm on the avatar adds a great deal to the experience. For one thing, it adds complexity and meaning to the game. Pressing a key sequence does not have a lot of variability and it certainly doesn’t provide the player with much control. The ability to hit the target could be very precise, such as in the case where you simply point at the target and hit the key sequence. This is boring, requires little skill and ultimately provides no opportunity to develop a skill. On the other hand, the precision of your attack could be dependent on a random number generator, which adds complexity and variability to the game, but still doesn’t provide any opportunity to improve. Or, the precision of the attack could depend on some other nuance of the game, like secondary key sequences, or timing of key sequences, which, although providing the opportunity to develop a skill, have nothing to do with a consistent approach to throwing something. So, it is much better to have your avatar have an arm. In addition, this simply models the reality that you know, and people are comfortable with things that are familiar.
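For the programmers in the audience, the difference between these aiming schemes can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function names, the `spread` parameter, and the rule that error shrinks with practice are all hypothetical, and no real game engine is implied.

```python
import random


def point_and_click(target):
    # Always lands exactly on the target: no skill needed, none developed.
    return target


def random_scatter(target, rng, spread=5.0):
    # Error comes from a random number generator: variable, but the
    # player can do nothing to improve it.
    return target + rng.uniform(-spread, spread)


def skilled_throw(target, rng, practice_throws, spread=5.0):
    # Error shrinks as the player practices (an assumed, made-up rule):
    # this is the only scheme that rewards developing a skill.
    skill_spread = spread / (1 + practice_throws)
    return target + rng.uniform(-skill_spread, skill_spread)


if __name__ == "__main__":
    rng = random.Random(1)
    print(point_and_click(100.0))
    print(random_scatter(100.0, rng))
    print(skilled_throw(100.0, rng, practice_throws=0))
    print(skilled_throw(100.0, rng, practice_throws=50))
```

Only the last function gives the player something to get better at, which is what having an arm on the avatar provides.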

So it is with our brains. In our virtual world, the digital template that is our brain is incapable of doing anything in the “simulation” that it isn’t designed to do. The digital simulation that is the RLL must follow the rules of RLL physics, much the way a “physics engine” provides the rules of physics for a computer game. And these rules extend to brain function. Imagine if, in the 21st century, we had no scientific explanation for how we process sensory input or make mental decisions because there was no brain in our bodies. Would that be a “reality” that we could believe in? So, in the level of reality that we call waking reality, we need a brain.

But that brain “template” doesn’t limit the ability of our mind to evolve any more than the lack of a brain or central nervous system prevents a collection of single-celled organisms called a slime mold from actually learning.

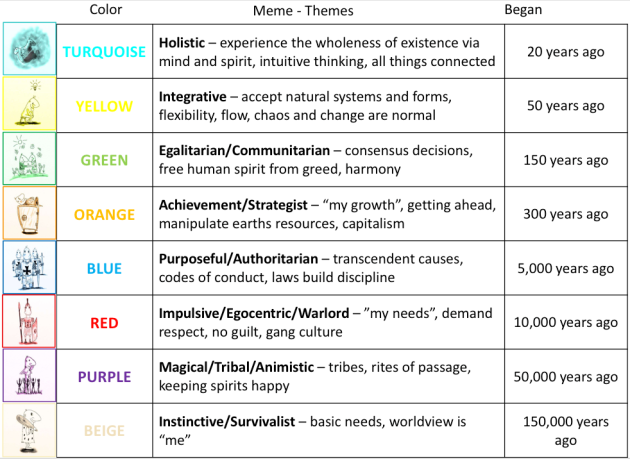

In fact, there is some good evidence for the idea that our minds are evolving as rapidly as technology. Spiral Dynamics is a model of the evolution of values and culture that can be applied to individuals, institutions, and all of humanity. The accompanying figure gives a very high level overview of the stages, or memes, described by the model.

Each of these stages represents a shift in values, culture, and thinking, as compared to the previous one. Given that it is the human mind that drives these changes, it is fair to say that the progression models the evolution of the human mind. As can be seen from the timeframes associated with the first appearance of each stage of humanity, this is an exponential progression. In fact, this is the same kind of progression that transhumanists use to demonstrate the exponential progression of technology and AI. This exponential progression of mind would seem to defy the logic that our minds, if based on fixed neurological wiring, are incapable of exponential development.

And so, higher level conscious thought and logic can easily evolve in the human mind in the truer reality, which may very well keep us ahead of the AI that we are creating in our little virtual reality. The trick is in letting go of our limiting assumptions that it cannot be done, and developing protocols for mental evolution.

So, maybe hold off on buying those front row tickets to the Singularity.