How to Survive an AI Apocalypse – Part 4: AI Run Amok Scenarios

January 19, 2024

PREVIOUS: How to Survive an AI Apocalypse – Part 3: How Real is the Hype?

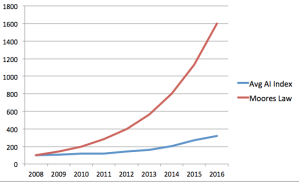

In Part 3 of this series on Surviving an AI Apocalypse, we examined some of the elements of AI-related publicity and propaganda that pervade the media these days and considered how likely they are. The conclusion was that while much has been overstated, there is still a real existential danger in the current path toward creating AGI, Artificial General Intelligence. In this and some subsequent parts of the series, we will look at several “AI Run Amok” scenarios and outcomes and categorize them according to likelihood and severity.

NANOTECH FOGLETS

Nanotech, or the technology of things at the scale of 10⁻⁹ meters (one nanometer), was originally envisioned by physicist Richard Feynman and popularized by K. Eric Drexler in his book Engines of Creation. It has the potential to accomplish amazing things (think: solve global warming or render all nukes inert) but also, like any great technology, to lead to catastrophic outcomes.

Computer scientist J. Storrs Hall upped the ante on nanotech potential with the idea of “utility fog,” based on huge swarms of nanobots under networked AI-programmatic control.

With such a technology, you could conceivably do cool and useful things like press a button and convert your living room into a bedroom at night, as all of the nanobots reconfigure themselves into beds and nightstands, and then back into a living room in the morning.

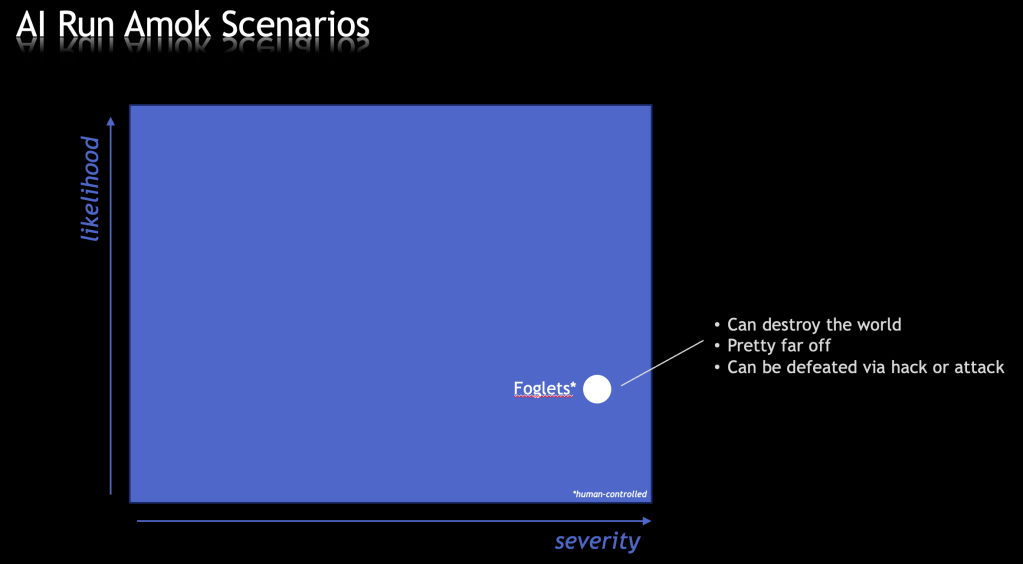

And of course, like any new tech, utility fog could be weaponized: carrying toxic agents, forming explosives, generating critical nuclear reactions, blocking out the sun from an entire country, and so on. The possibilities are limited only by imagination. Where does this sit in our Likelihood/Severity space?

I put it in the lower right because, while the potential consequences of foglets in the hands of a bad actor could be severe, such technology is quite far off, so it’s probably way too soon to worry about it. In addition, an attack could be defeated via a hack or a counterattack. As with the cybersecurity battle, such a contest will almost always be won by the entity with the deeper pockets, which will presumably be the world government by the time such tech is available.

GREY GOO

A special case of foglet danger is the concept of grey goo, whereby the nanobots are programmed with two simple instructions:

- Consume what you can of your environment

- Continuously self-replicate and give your replicants the same instructions

The result would be a slow liquefaction of the entire world.
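To get a feel for how those two instructions play out, here is a minimal toy sketch. It is not a model of real nanotechnology. The function name `simulate_grey_goo`, the idea of counting matter in abstract "units," and the rule that each bot makes at most one copy per cycle are all assumptions made up for illustration.

```python
def simulate_grey_goo(total_matter: int, initial_bots: int = 1,
                      matter_per_copy: int = 1) -> list[int]:
    """Toy model (hypothetical): return the bot count after each cycle.

    Assumptions, not facts about real nanobots:
    - the environment is a fixed pool of `total_matter` abstract units
    - building one copy consumes `matter_per_copy` units
    - each bot builds at most one copy per cycle
    """
    if total_matter < 0 or initial_bots < 1 or matter_per_copy < 1:
        raise ValueError("invalid simulation parameters")

    bots = initial_bots
    remaining = total_matter
    history = [bots]

    while remaining >= matter_per_copy:
        # Instruction 1: consume what you can of your environment.
        affordable = remaining // matter_per_copy
        new_bots = min(bots, affordable)
        remaining -= new_bots * matter_per_copy

        # Instruction 2: self-replicate; the copies follow the same rules
        # because they are counted in `bots` on the next cycle.
        bots += new_bots
        history.append(bots)

    return history


if __name__ == "__main__":
    history = simulate_grey_goo(total_matter=1_000_000)
    print(f"cycles until the matter runs out: {len(history) - 1}")
    print(f"final bot count: {history[-1]}")
```

In this toy version the population doubles every cycle until the matter runs short, so how "slow" the liquefaction is depends almost entirely on how long one replication cycle takes, which the sketch deliberately leaves unspecified.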

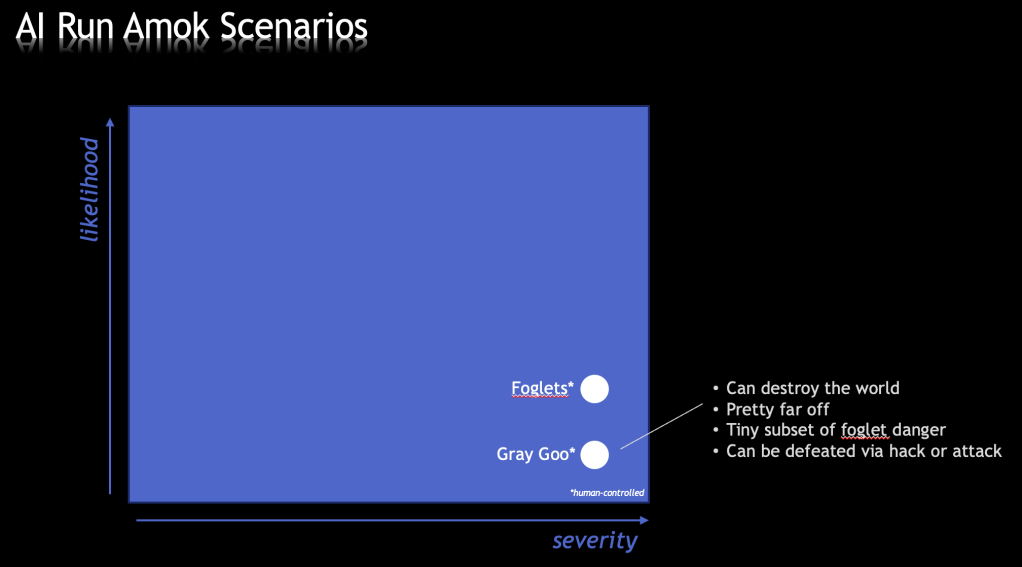

Let’s add this to our AI Run Amok chart…

I put it in the same relative space as the foglet danger in general, though even less likely, because the counterattack could be a pretty simple reprogramming. Note, however, that this assumes that the deployment of such technologies, while AI-based at their core, is being done by humans. In the hands of an ASI, the situation would be completely different, as we will see.

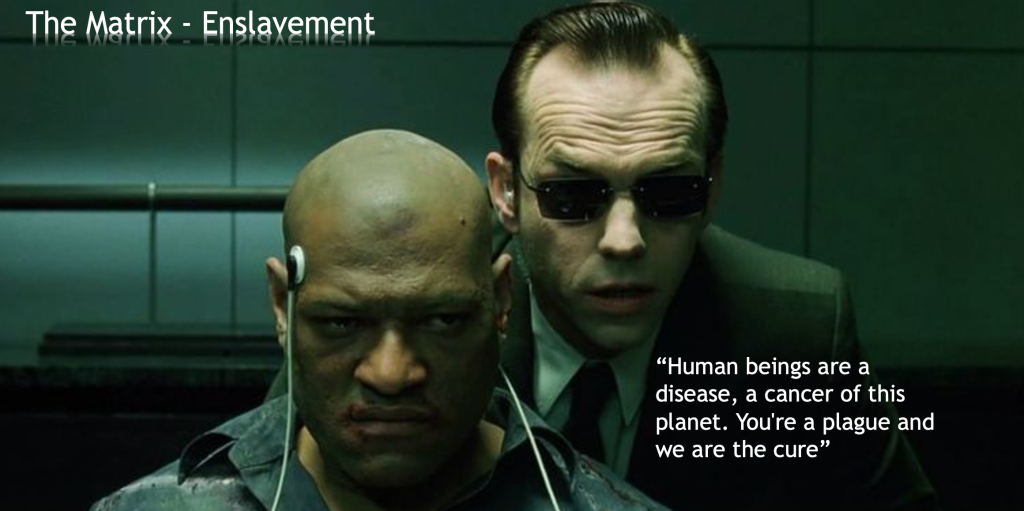

ENSLAVEMENT

Let’s look at one more scenario, most aptly represented by the movie The Matrix, in which AI enslaves humanity to be used, for some odd reason, as a source of energy. Agent Smith, anyone?

There may be other reasons that AI might want to keep us around. But honestly, why bother? Sad to say, but what would an ASI really need us for?

So I put the likelihood very low. And frankly, if we were enslaved, Matrix-style, is the severity that bad? Like Cipher said, “Ignorance is bliss.”

If you’re feeling good about things now, don’t worry, we haven’t gotten to the scary stuff yet. Stay tuned.

In the next post, I’ll look at a scenario near and dear to all of our hearts, and at the top of the Likelihood scale, since it is already underway – Job Elimination.

NEXT: How to Survive an AI Apocalypse – Part 5: Job Elimination